Hi, I am a guy in my early thirties with a wife and two kids, and whenever I go on YouTube it always suggests conservative things like guys dunking on women, Ben Shapiro reacting to some bullshit, Joe Rogan, the works. I almost never allow it to go to that type of video, and when I do it is either by accident or out of curiosity. My interests are gaming, standup comedy, memes, react videos, metallurgy, machining, and blacksmithing, with occasional songs and videos about funny bullshit. I am not from America, and I consider myself pretty liberal if I had to put it in American terms. But European liberal, so by American standards a socialist. Why does it recommend this shit to me? Is this some kind of vector for radicalization of guys in my category? Do you have similar experiences?

You most probably viewed these types of videos a few times and the algorithm started recommending them to you. It only takes a couple of videos for the algorithm to start recommending videos on the same topic. You could easily solve this by clicking on the 3 dots next to the video and then selecting “Not interested”. Do it enough times and they’ll be gone from your feed.

This, the algorithm doesn’t care whether you enjoy it or not, it cares whether you engage with it or not. Even dislikes are engagement.

I am constantly bombarded with Jordan Peterson videos despite disliking them and telling the algorithm to show less like this.

I’m not sure how it profiles people, but it sucks.

Go into your watch history and remove them after disliking them. I find removing stuff from my watch history has a bigger impact than disliking stuff. You can also have YouTube stop recommending specific channels to you. Odds are if they’re posting Jordan Peterson content, you’re not missing anything of value by blocking them.

Disliking is engagement. Instead, tap the three dots and select “Not interested”.
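To make the point above concrete, here is a toy sketch in Python. Nobody outside YouTube knows how the real ranking works, so everything here is an assumption: the signal names, the weights in `SIGNAL_WEIGHTS`, and the functions `topic_scores` and `recommend` are all made up for illustration. It only shows the idea that a dislike can count as engagement while an explicit “Not interested” is the signal that pushes a topic down.

```python
from collections import defaultdict

# Hypothetical weights, invented for this sketch. Every interaction counts
# as engagement, including a dislike. Only the explicit "not interested"
# signal carries a negative weight.
SIGNAL_WEIGHTS = {
    "watch": 1.0,
    "like": 1.5,
    "dislike": 1.0,
    "comment": 2.0,
    "not_interested": -5.0,
}


def topic_scores(events):
    """Sum the weights of (topic, signal) events into a per-topic score."""
    scores = defaultdict(float)
    for topic, signal in events:
        scores[topic] += SIGNAL_WEIGHTS[signal]
    return dict(scores)


def recommend(events, top_n=3):
    """Return up to top_n topics with a positive score, highest first."""
    scores = topic_scores(events)
    ranked = sorted(scores, key=lambda topic: scores[topic], reverse=True)
    return [topic for topic in ranked if scores[topic] > 0][:top_n]


# Made-up history: the user hate-watches one politics video and dislikes it.
events = [
    ("blacksmithing", "watch"),
    ("blacksmithing", "like"),
    ("politics", "watch"),
    ("politics", "dislike"),
]
print(recommend(events))  # politics is still recommended

# Marking the topic as "not interested" is what removes it in this toy model.
events.append(("politics", "not_interested"))
print(recommend(events))  # only blacksmithing is left
```

In this toy model the dislike raises the politics score instead of lowering it, which is the behaviour people in this thread are describing.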

You don’t even have to watch those types of videos. I got into watching Star Trek lore videos and ended up on one channel in particular, whose videos always devolved into rants against SJWs and leftists. This from someone who posts lore videos about a show about luxury gay space communism. But simply because a large portion of his viewership apparently also engages with the bigotry and hatred, I started getting tons of recommendations for shit that I have never watched and would never watch.

But yes, marking “Not interested” and deleting from history was the best way to get it out.

If I accidentally watch a Linus Tech Tips video, that will be all it recommends me for the next month.

I watched a Some More News video criticizing Jordan Peterson, and Google thought “did I hear Jordan Peterson? Well in that case, here’s 5 of his videos!”

Almost all content algorithms are hot garbage that are not interested in serving you what you want, just what makes money. It always ends up serving right wing nut jobs because conspiracy theorists watch a lot of scam videos.

Edit: my little jab at Linus has nothing to do with politics. I have no idea what his views are. I only mentioned it to point out how YouTube will annihilate my recommendations if I watch a single one of his videos.

To be fair, you did watch a 3 hour JP video…

That’s my point, the algorithm doesn’t understand context.

I watch Linus from time to time, but don’t get that sort of recommendation (unless I watch some gun videos!). I only watch his tech stuff and don’t know anything about his politics. Now I’m worried.

Oh I didn’t want to imply that Linus puts out political opinions. Of the few videos I’ve seen of his it’s all tech hype videos. I was only giving an example of the algorithm deciding to nuke my recommendations if I watch one of his videos.

If you watch any kind of gaming videos and haven’t trained your algorithm, then you’ll get flooded with this shit.

Like others have said, the things you watch are prime interests for the right wing in the US. You have to train the algorithm that you don’t want it.

I think the algorithm is so distorted by right-wing engagement that it will end up recommending right-wing content, even if you actively try to avoid it. I watch YouTube Shorts and I always skip if it’s Shapiro, Peterson, Tate or Piers Morgan. I also skip the moment I feel like the short might be right-wing. Scroll enough and eventually the algorithm will go “How about some Shapiro, Peterson, Tate or Morgan?” Give it enough time and it will always try to feed you right-wing content.

I suppose if you do nothing but scroll YouTube endlessly, it just starts to recommend anything to keep you on the platform.

But I’ve had an account since they started and even watch The Young Turks and C-Span here and there and almost never get anything political, let alone right wing.

My algorithm is at the point where I get Korean commercials, which is honestly fine with me.

Idk about that tbh, I have an account, and my algo is pretty trained, and there are times I have even told YouTube to not suggest me Peterson and Shapiro stuff! I mostly watch leftist content! But I still get Ben Shapiro reacting to Barbie and whatnot (I am not even an American).

The best way to tune the algorithm on Youtube is to aggressively prune your watch/search history.

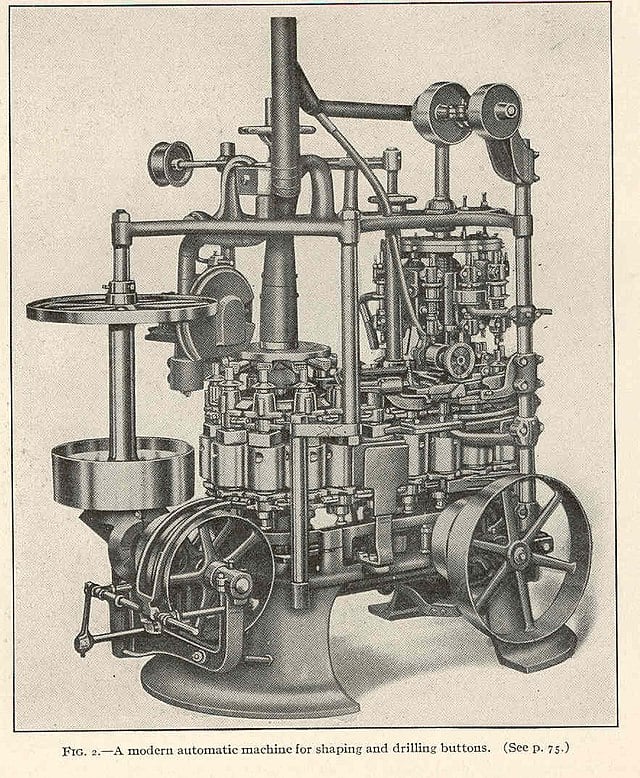

Even just one “stereotypical” video can cause your recommendations to go to shit. Machining and blacksmithing are highly correlated with right wing BS in the US. Check my uname and ask me how I know. 😁

This one may not be so obvious, but I’ve seen someone lose their marbles over the crazy idea that they get recommended stuff for children when all they watch on YouTube is Minecraft videos and sometimes Roblox. And people in the comments agreed…

The YouTube subreddit wasn’t full of bright people.

“I almost never allow it”

The times you do allow it are all the algorithm cares about, sadly. Any kind of engagement is great for companies.

“Hate Rogan? Cool, watch some Rogan as hateporn, hate watching is still watching.”

If I use a private window and don’t log in, I get a lot of right-wing stuff. I’ve noticed it probably depends on IP/location as well. If I’m at work, YouTube seems to recommend me things other people at the office listen to.

If I’m logged in, I only get occasional right-wing recommendations interspersed with the left-wing stuff I typically like. About 1/20 videos are right-wing.

YouTube Shorts is different. It’s almost all thirst-traps and right-wing, hustle culture stuff for me.

It could also be because a lot of the people who watch the same videos you do tend to also watch right-wing stuff.
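That “people who watch what you watch” effect can be sketched as a tiny co-watch recommender. This is only an illustration under my own assumptions, not YouTube’s actual system: the function `co_watch_recommendations`, the user names, and the video names are all hypothetical. It recommends videos watched by users whose history overlaps with yours, weighted by the size of the overlap.

```python
from collections import Counter


def co_watch_recommendations(histories, me, top_n=3):
    """Recommend videos watched by users whose history overlaps with mine.

    histories maps a user name to the set of videos that user watched.
    Each unseen video is weighted by how many videos its viewer shares with me.
    """
    mine = histories[me]
    counts = Counter()
    for user, watched in histories.items():
        if user == me:
            continue
        overlap = len(mine & watched)
        if overlap == 0:
            continue  # users with no shared videos tell us nothing
        for video in watched - mine:
            counts[video] += overlap
    return [video for video, _ in counts.most_common(top_n)]


# Made-up data: the viewer only watches machining and blacksmithing videos,
# but two users with overlapping histories also watch a political rant.
histories = {
    "op": {"forging_knife", "lathe_basics"},
    "u1": {"forging_knife", "lathe_basics", "pundit_rant"},
    "u2": {"forging_knife", "pundit_rant"},
    "u3": {"lathe_basics", "standup_set"},
    "u4": {"kpop_dance"},
}
print(co_watch_recommendations(histories, "op"))
# → ['pundit_rant', 'standup_set']
```

The viewer never watched anything political here. The rant ranks first purely because of what the overlapping viewers watched.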

In general, the algorithm tries to boost the stuff that maximizes “engagement,” which is usually outrage-type stuff.

If they piss you off, you will stay on their platform longer, and they make more money.

That is the sad truth of EVERY social network.

Lemmy might not be that advanced yet, but as soon as they get big enough to need ads to pay for bandwidth and storage, soon after they will add algorithms that will show you stuff that pisses you off.

One way to combat this is to take a break from the site. Usually after a week, when you come back it will be better for a while.

I think it has more to do with the stuff you watch than wanting to piss you off.

All YouTube recommends to me are videos of kpop, dog grooming, Kitten Lady, and some Friesian horse stable that went across my feed once. Oh, and some historical sewing stuff.

If they started recommending stuff that pissed me off, I wouldn’t bother going back except for direct videos linked from elsewhere.

Edit: Rereading what OP said they watch, their interests are primary interests of the right wing in the US. If they don’t train the algorithm that they don’t want it, the algorithm doesn’t know that those interests don’t intersect.

“Lemmy might not be that advanced yet, but as soon as they get big enough to need ads to pay for bandwidth and storage, soon after they will add algorithms that will show you stuff that pisses you off.”

Who is the “they” that is going to implement what you claim? And how are they going to do that, specifically? Lemmy isn’t reddit or youtube, technically. There is no central authority. Lemmy.world can’t change how this technology works just because they might want to start injecting recommendations or ads. That’s the point of this system.

Who is hosting this? Lemmy.ml, all the federated sites? With the reddit exodus there is probably a lot more activity. Who’s paying for that? That’s who they would be in this situation I think.

Your interests have a strong correlation with people on the right aside from maybe react videos.

But even if your interests were not so strongly correlated with the right, you would probably still get right wing ads or videos suggested. They garner the highest engagement because it is often outrage porn. Google gets their money that way. My subscriptions are to left wing political channels, science, and solar channels, but I still get a decent amount of PragerU and Matt Walsh ads. Reporting them does not stop them from popping up either.

You need to train your algorithm.

When you hover over those videos there will be three dots in the lower right hand corner. Use either “Not interested” or “Don’t recommend this channel” to clear the right wing trash from your feed.

On videos you do like, you need to do some engagement: watch complete videos, thumbs up (or down, it doesn’t matter) and comment.

Do that for a few days and your feed should clear up.

I’m a daily YouTube user and this has not been the case for me.

My feed, outside the occasional straggler, is pretty free of nonsense.

Idk I’ve spent the last year doing what OP says and my youtube algorithm knows me so well I lose hours upon hours. It is like a firehose of all my hobbies. I don’t get any politics recommended to me at all, right or left.

I’m 30 and have a small family, too. When I watch Shorts on YouTube I get the exact same content you’re describing. None of the long videos I’m watching are political, yet the algo keeps throwing them at me. I get a lot of Jordan Peterson crap or Lil Wayne explaining how there’s no racism. I hate it.

You’ve probably watched the other side quite often, so YouTube is recommending this for ya to enrage you and keep you engaged. Just good old rage baiting.

Social media in general has that habit of further spreading far right content and dragging people into such content bubbles.

Conservatives and fascists are the same group, so I’ll refer to them as fascists.

You are talking about one of the core criticisms of secret corporate algorithms that determine what to influence you with. Fascism is forced to creep into everyone’s world view when you use standard social media, and the average person wouldn’t have the slightest idea. Certain key topics will be more related to fascist content, like philosophy, psychology, guns, comedy. If you think about what fascists enjoy, or what they need to slander, then what I said makes more sense.

Jordan Peterson does a lot of vids around psych/philosophy to redirect curious people to false answers that are close to true but more agreeable for fascists. An example of a psychological co-option is “mass psychosis” being co-opted into “mass formation psychosis” by fascists. Mass psychosis explains too many true things, whereas mass formation psychosis redirects people towards a more palatable direction for them.

This is why I want to be nowhere near corporate media if possible. If you delete your cookies (or browse privately for the same effect) then YouTube will promote the most adjacent things to what you watch like old YouTube used to do, although it’ll still promote fascism when directly adjacent. With cookies, though, they have an excuse to have questionable content linger statistically too often.

You actually believe that every single conservative is a fascist? Jesus Christ, political literacy is dead.